NND

- class frlearn.data_descriptors.NND(dissimilarity: str or float or Callable[[np.array], float] or Callable[[np.array, np.array], float] = 'boscovich', k: int or Callable[[int], float] or None = 1, weights: Callable[[int], np.array] | None = None, proximity: Callable[[float], float] = <function shifted_reciprocal>, nn_search: NeighbourSearchMethod = <frlearn.neighbours.neighbour_search_methods.KDTree object>, preprocessors=(<frlearn.statistics.feature_preprocessors.IQRNormaliser object>,))

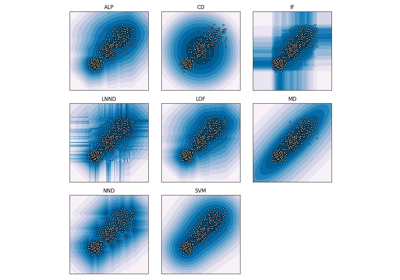

Implementation of the Nearest Neighbour Distance (NND) data descriptor, which goes back to at least [1]. It has also been used to calculate the upper and lower approximations of fuzzy rough sets; the extension with aggregation by ordered weighted averaging (OWA) operators is due to [2].
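The core idea can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not frlearn's implementation: the helper names `boscovich` and `nnd_score` are hypothetical, there is no preprocessing or OWA weighting, and distances are converted to proximities with 1 / (1 + d), which is assumed here to be what `shifted_reciprocal` computes. A query is scored by the proximity of its kth nearest training instance, so queries close to the training data score near 1.

```python
# Hypothetical sketch of the NND idea; not frlearn's API.
from typing import List, Sequence


def boscovich(x: Sequence[float], y: Sequence[float]) -> float:
    """Boscovich (Manhattan) distance: sum of absolute coordinate differences."""
    return sum(abs(b - a) for a, b in zip(x, y))


def nnd_score(train: List[Sequence[float]], y: Sequence[float], k: int = 1) -> float:
    """Proximity of y to its kth nearest training instance (k is 1-based)."""
    distances = sorted(boscovich(x, y) for x in train)
    kth_distance = distances[k - 1]
    # Assumed shifted reciprocal: order-reversing, distance 0 -> proximity 1.
    return 1 / (1 + kth_distance)


train = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0)]
print(nnd_score(train, (0.0, 0.0)))       # 1.0: the query is a training point
print(nnd_score(train, (3.0, 3.0)))       # 0.2: the nearest distance is 4
print(nnd_score(train, (3.0, 3.0), k=2))  # about 0.167 (1 / 6): 2nd nearest distance is 5
```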

- Parameters

- dissimilarity: str or float or (np.array -> float) or ((np.array, np.array) -> float) = ‘boscovich’

The dissimilarity measure to use.

A vector size measure np.array -> float induces a dissimilarity measure through application to y - x. A float is interpreted as Minkowski size with the corresponding value for p. For convenience, a number of popular measures can be referred to by name.

The default is the Boscovich norm (also known as cityblock, Manhattan or taxicab norm).

- k: int or (int -> float) or None = 1

Which nearest neighbour(s) to consider. Should be either a positive integer, or a function that takes the target class size n and returns a float, or None, which is resolved as n. All such values are rounded to the nearest integer in [1, n]. If weights = None, only the kth neighbour is used, otherwise closer neighbours are also taken into account.

- proximity: float -> float = np_utils.shifted_reciprocal

The function used to convert distance values to proximity values. It should be an order-reversing map from [0, ∞) to [0, 1].

- weights: (int -> np.array) or None = None

How to aggregate the proximity values from the k nearest neighbours. The default is to only consider the kth nearest neighbour distance.

- preprocessors: iterable = (IQRNormaliser(), )

Preprocessors to apply. The default interquartile range normaliser rescales each feature so that all features have the same interquartile range.
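The parameters above can be tied together in a slightly larger sketch. It is a hypothetical, dependency-free illustration rather than frlearn's code: `minkowski`, `resolve_k`, `iqr_scales`, `linear_weights` and `nnd_score` are illustrative names; the quartiles come from Python's `statistics.quantiles`, whose interpolation may differ from frlearn's; a feature with zero interquartile range is assumed to be left unscaled; and the OWA weights k, k - 1, ..., 1 (normalised) are an arbitrary example, not a library default.

```python
# Hypothetical sketch of how the NND parameters interact; not frlearn's API.
import math
import statistics
from typing import Callable, List, Optional, Sequence, Union

Vector = Sequence[float]


def minkowski(x: Vector, y: Vector, p: float = 1.0) -> float:
    """Minkowski size of y - x; p=1 gives the Boscovich (Manhattan) norm."""
    diffs = [abs(b - a) for a, b in zip(x, y)]
    if math.isinf(p):
        return max(diffs)  # limiting case p -> infinity (Chebyshev)
    return sum(d ** p for d in diffs) ** (1 / p)


def resolve_k(k: Union[int, Callable[[int], float], None], n: int) -> int:
    """Resolve k for target class size n and round it into [1, n]."""
    if k is None:
        value = n      # None is resolved as n
    elif callable(k):
        value = k(n)   # e.g. lambda n: n ** 0.5
    else:
        value = k
    return min(max(round(value), 1), n)  # Python's round sends ties to even


def iqr_scales(data: Sequence[Vector]) -> List[float]:
    """Per-feature interquartile range; constant features get scale 1 (assumption)."""
    scales = []
    for column in zip(*data):
        q1, _, q3 = statistics.quantiles(column, n=4)
        scales.append((q3 - q1) or 1.0)
    return scales


def linear_weights(k: int) -> List[float]:
    """Hypothetical OWA weights k, k-1, ..., 1, normalised to sum to 1."""
    total = k * (k + 1) / 2
    return [(k - i) / total for i in range(k)]


def nnd_score(
    train: Sequence[Vector],
    y: Vector,
    k: Union[int, Callable[[int], float], None] = 1,
    p: float = 1.0,
    weights: Optional[Callable[[int], List[float]]] = None,
    proximity: Callable[[float], float] = lambda d: 1 / (1 + d),
) -> float:
    """NND score of query y against the target class train (illustrative only)."""
    scales = iqr_scales(train)

    def rescale(v: Vector) -> List[float]:
        return [a / s for a, s in zip(v, scales)]

    y_scaled = rescale(y)
    k_int = resolve_k(k, len(train))
    distances = sorted(minkowski(rescale(x), y_scaled, p) for x in train)
    # Ascending distances + order-reversing proximity -> descending proximities.
    proximities = [proximity(d) for d in distances[:k_int]]
    if weights is None:
        return proximities[-1]  # only the kth nearest neighbour counts
    # OWA: the i-th weight applies to the i-th largest proximity.
    return sum(w * q for w, q in zip(weights(k_int), proximities))


train = [(0.0, 0.0), (1.0, 2.0), (2.0, 1.0), (3.0, 3.0), (4.0, 4.0)]
print(nnd_score(train, (10.0, 10.0), k=3))
print(nnd_score(train, (10.0, 10.0), k=3, weights=linear_weights))
```

With `weights=None` only the third nearest neighbour determines the score; with `linear_weights` the nearer neighbours also contribute, so the weighted score lies between the proximities of the kth and the nearest neighbour.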

Notes

k is the principal hyperparameter that can be tuned to increase performance. Its default value is based on the empirical evaluation in [3].

References