FRPS

- class frlearn.instance_preprocessors.FRPS(owa_weights: typing.Callable[[int], numpy.array] = ReciprocallyLinearWeights(), quality_measure: str = 'lower', aggr_R=numpy.mean, dissimilarity: str = 'boscovich', nn_search: frlearn.neighbours.neighbour_search_methods.NeighbourSearchMethod = frlearn.neighbours.neighbour_search_methods.KDTree())

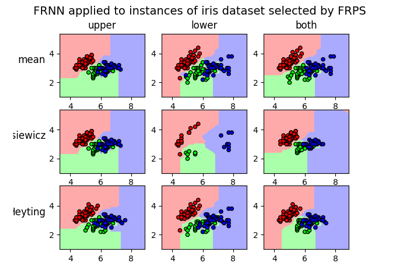

Implementation of the Fuzzy Rough Prototype Selection (FRPS) preprocessor.

Calculates a quality measure for each training instance; these values serve as potential thresholds for selecting instances. For each potential threshold, the candidate instance set consists of all instances whose quality measure is at least that threshold. Each candidate set is evaluated by comparing the decision class of each instance with the decision class of its nearest neighbour within the candidate set (excluding the instance itself). The candidate set with the highest number of matches is selected.
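
The selection procedure described above can be sketched in plain NumPy. This is a minimal illustration under stated assumptions, not frlearn's own code: the function name select_prototypes is hypothetical, the quality values are taken as given, the Boscovich distance is computed by brute force instead of through nn_search, every training instance is scored against its nearest neighbour in the candidate set, and ties between thresholds are broken in favour of the smallest threshold, which is an arbitrary choice.

```python
import numpy as np


def select_prototypes(X, y, quality):
    """Hypothetical sketch of FRPS threshold selection.

    Returns a boolean mask over the training instances marking the
    candidate set with the most nearest-neighbour class matches.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    quality = np.asarray(quality, dtype=float)

    # Pairwise Boscovich (cityblock) distances, brute force for clarity.
    D = np.abs(X[:, None, :] - X[None, :, :]).sum(axis=-1)
    # An instance may never be its own nearest neighbour.
    np.fill_diagonal(D, np.inf)

    best_mask, best_matches = None, -1
    # Each distinct quality value is a potential threshold (ascending order).
    for threshold in np.unique(quality):
        mask = quality >= threshold
        # Singleton candidate sets cannot be evaluated, so skip them.
        if mask.sum() < 2:
            continue
        # Nearest neighbour of every training instance within the candidate set.
        nn = np.flatnonzero(mask)[np.argmin(D[:, mask], axis=1)]
        matches = int(np.sum(y[nn] == y))
        # Strict '>' keeps the first (smallest) threshold among ties.
        if matches > best_matches:
            best_mask, best_matches = mask, matches
    return best_mask


X = [[0.0], [0.1], [0.2], [1.0], [1.1], [1.2], [0.15]]
y = [0, 0, 0, 1, 1, 1, 1]        # the last instance is a mislabelled outlier
quality = [0.9, 0.9, 0.9, 0.9, 0.9, 0.9, 0.1]
print(select_prototypes(X, y, quality))  # only the last instance is dropped
```

With the full set (threshold 0.1) the outlier becomes the nearest neighbour of two class-0 instances, giving 4 matches out of 7; raising the threshold to 0.9 removes it and gives 6 matches, so that candidate set is selected.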

- Parameters

- quality_measure: str {'upper', 'lower', 'both'}, default='lower'

Quality measure used to calculate thresholds: the membership degree of each instance in the upper approximation of its decision class, in the lower approximation, or the mean of both. [2] recommends the lower approximation.

- aggr_R: (ndarray, int) -> ndarray, default=np.mean

Function that takes an ndarray and a keyword argument axis, and returns an ndarray with the corresponding axis removed. Used to define the similarity relation R from the per-attribute similarities. [1] uses the Łukasiewicz t-norm, while [2] offers a choice between the Łukasiewicz t-norm, the mean and the minimum, and recommends the mean.

- owa_weights: OWAOperator, default=ReciprocallyLinearWeights()

OWA weights used to calculate the soft maximum and/or minimum in the quality measure. [1] uses linear weights, while [2] uses reciprocally linear weights.

- dissimilarity: str or float or (np.array -> float) or ((np.array, np.array) -> float), default='boscovich'

The dissimilarity measure to use.

A vector size measure np.array -> float induces a dissimilarity measure through application to y - x. A float is interpreted as the Minkowski size with the corresponding value of p. For convenience, a number of popular measures can also be referred to by name.

The default is the Boscovich norm (also known as cityblock, Manhattan or taxicab norm).

- nn_search: NeighbourSearchMethod, default=KDTree()

Nearest neighbour search algorithm to use.
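
The two parameters that shape the quality measure, aggr_R and owa_weights, can be illustrated with a small NumPy sketch. The helper names are hypothetical, and the weight formulas are the standard linear and reciprocally linear OWA weight definitions, assumed here rather than copied from frlearn's ReciprocallyLinearWeights. The lukasiewicz function is one way to express the Łukasiewicz t-norm in the aggr_R calling convention (an array plus an axis keyword argument).

```python
import numpy as np


def lukasiewicz(a, axis=-1):
    """n-ary Łukasiewicz t-norm: max(0, sum(a) - (n - 1)) along `axis`."""
    a = np.asarray(a, dtype=float)
    n = a.shape[axis]
    return np.maximum(0.0, a.sum(axis=axis) - (n - 1))


def linear_weights(n):
    """Linear OWA weights: w_i proportional to n + 1 - i, summing to 1."""
    w = np.arange(n, 0, -1, dtype=float)
    return w / w.sum()


def reciprocally_linear_weights(n):
    """Reciprocally linear OWA weights: w_i proportional to 1 / i, summing to 1."""
    w = 1.0 / np.arange(1, n + 1)
    return w / w.sum()


def owa_soft_max(values, weights):
    """The largest value receives the first (largest) weight."""
    return float(np.dot(np.sort(values)[::-1], weights(len(values))))


def owa_soft_min(values, weights):
    """The smallest value receives the first (largest) weight."""
    return float(np.dot(np.sort(values), weights(len(values))))


# Per-attribute similarities between one pair of instances (3 attributes).
per_attribute = np.array([0.9, 0.8, 0.7])
print(np.mean(per_attribute, axis=-1))       # mean aggregation
print(lukasiewicz(per_attribute, axis=-1))   # Łukasiewicz aggregation

values = np.array([0.2, 0.5, 0.9])
for weights in (linear_weights, reciprocally_linear_weights):
    print(weights.__name__, owa_soft_min(values, weights), owa_soft_max(values, weights))
```

The Łukasiewicz t-norm is much stricter than the mean: similarities of 0.9, 0.8 and 0.7 aggregate to 0.8 under the mean but only to 0.4 under the Łukasiewicz t-norm, because every shortfall from 1 is subtracted.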

Notes

There are a number of implementation differences between [1] and [2]; in each case, the present implementation follows [2]:

[1] calculates upper and lower approximations using all instances, while [2] only calculates upper approximation membership over instances of the decision class, and lower approximation membership over instances of other decision classes. This determines the length of the OWA weight vector, i.e. over how many values the weights are 'stretched'.

In addition, [2] excludes each instance from the calculation of its own upper approximation membership.

[1] uses linear weights, while [2] uses reciprocally linear weights.

[1] uses the Łukasiewicz t-norm to aggregate per-attribute similarities, while [2] recommends using the mean.
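
Following the description of [2] above, the three quality measures can be sketched from a precomputed similarity matrix R, where R[i, j] is the similarity between instances i and j. This sketch assumes an implicator with I(a, 0) = 1 - a (such as the Łukasiewicz implicator), so that lower approximation membership reduces to a soft minimum of 1 - R over instances of other classes, while upper approximation membership reduces to a soft maximum of R over the other instances of the same class. It also assumes at least two classes and at least two instances per class. The function name frps_quality is hypothetical.

```python
import numpy as np


def reciprocally_linear_weights(n):
    """OWA weights proportional to 1 / i, summing to 1."""
    w = 1.0 / np.arange(1, n + 1)
    return w / w.sum()


def frps_quality(R, y, measure="lower", weights=reciprocally_linear_weights):
    """Hypothetical sketch of the FRPS quality measures in the style of [2]."""
    R = np.asarray(R, dtype=float)
    y = np.asarray(y)
    q = np.empty(len(y))
    for i in range(len(y)):
        same = y == y[i]
        same[i] = False  # exclude the instance from its own upper approximation
        other = y != y[i]
        # Upper: soft max of R over the rest of the class,
        # with the weights stretched over same.sum() values.
        sims = np.sort(R[i, same])[::-1]
        upper = np.dot(sims, weights(len(sims)))
        # Lower: soft min of 1 - R over the other classes,
        # with the weights stretched over other.sum() values.
        dissims = np.sort(1.0 - R[i, other])
        lower = np.dot(dissims, weights(len(dissims)))
        if measure == "upper":
            q[i] = upper
        elif measure == "lower":
            q[i] = lower
        elif measure == "both":
            q[i] = (upper + lower) / 2
        else:
            raise ValueError(f"unknown quality measure: {measure!r}")
    return q


X = np.array([0.0, 0.1, 0.9, 1.0])          # one attribute, already in [0, 1]
R = 1.0 - np.abs(X[:, None] - X[None, :])   # per-attribute similarity 1 - |x - y|
print(frps_quality(R, [0, 0, 1, 1], "lower"))  # approximately [14/15, 5/6, 5/6, 14/15]
```

Because each instance only contributes same-class similarities to its upper approximation and other-class similarities to its lower approximation, the weight vectors have different lengths per instance whenever the classes differ in size.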

In addition, there are implementation issues not addressed in [1] or [2]:

It is unclear which dissimilarity the nearest neighbour search should use. It seems reasonable that it should either correspond with the similarity relation R (and therefore incorporate the same aggregation strategy from per-attribute similarities), or match whatever dissimilarity is used by the nearest neighbour classifier applied after FRPS. By default, the present implementation uses the Boscovich norm on the scaled attribute values.

When the largest quality measure value corresponds to a singleton candidate instance set, it cannot be evaluated (because the single instance in that set has no nearest neighbour). Since this is an edge case that would not score highly anyway, it is simply excluded from consideration.
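
To make the dissimilarity options concrete, the sketch below shows how a vector size measure induces a dissimilarity through application to y - x, with a float p interpreted as the Minkowski size, and compares the Boscovich norm on scaled attribute values with a dissimilarity derived from R. The helper names and the min-max scaling are hypothetical, and the per-attribute similarity 1 - |x_a - y_a| on scaled attributes is an assumption made for this illustration. Under that assumption, and with the mean as aggr_R, 1 - R(x, y) equals the Boscovich distance divided by the number of attributes, so both choices rank neighbours identically.

```python
import numpy as np


def minkowski_size(p):
    """Vector size measure (sum |v_i|^p)^(1/p); p = 1 gives the Boscovich norm."""
    def size(v):
        return float(np.sum(np.abs(v) ** p) ** (1.0 / p))
    return size


def induced_dissimilarity(size):
    """A size measure induces a dissimilarity through application to y - x."""
    return lambda x, y: size(np.asarray(y, dtype=float) - np.asarray(x, dtype=float))


def scale(X):
    """Hypothetical min-max scaling of each attribute to [0, 1]."""
    X = np.asarray(X, dtype=float)
    span = X.max(axis=0) - X.min(axis=0)
    span[span == 0] = 1.0  # constant attributes: avoid division by zero
    return (X - X.min(axis=0)) / span


boscovich = induced_dissimilarity(minkowski_size(1))
euclidean = induced_dissimilarity(minkowski_size(2))
print(boscovich([0, 0], [3, 4]), euclidean([0, 0], [3, 4]))  # 7.0 5.0

rng = np.random.default_rng(0)
Xs = scale(rng.random((5, 3)) * [1, 10, 100])
x, others = Xs[0], Xs[1:]
d_boscovich = np.array([boscovich(x, o) for o in others])
# 1 - R, with R the mean of per-attribute similarities 1 - |x_a - y_a|.
d_from_R = 1.0 - np.mean(1.0 - np.abs(others - x), axis=1)
print(np.allclose(d_boscovich / Xs.shape[1], d_from_R))  # True
```

With a different aggr_R, such as the Łukasiewicz t-norm, this equivalence no longer holds, which is why the choice of dissimilarity for the nearest neighbour search remains a genuine design decision.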

References

- 1

- 2